It's always a good idea to back up your database, because you never know...

If the service you're using doesn't do automated backups, you can easily schedule them with GitHub Actions.

For example, I have a daily scheduled job that backs up my Postgres database to Google Cloud Storage.

First, you'll need to create a Google Cloud service account for GitHub. The steps are similar to the ones for connecting Google Secret Manager to GitHub.
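If you'd rather use the CLI than the console, here is a minimal sketch using `gcloud` and the GitHub CLI (`gh`). It assumes both tools are installed and logged in, and that you run it from a checkout of your repository. `my-project` and `github-db-backup` are placeholder names. The two GitHub secret names are the ones the workflow reads.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Placeholders (hypothetical): replace with your own project and account name.
PROJECT_ID="my-project"
SA_NAME="github-db-backup"
SA_EMAIL="${SA_NAME}@${PROJECT_ID}.iam.gserviceaccount.com"

# Create the service account that GitHub Actions will authenticate as.
gcloud iam service-accounts create "$SA_NAME" \
  --project="$PROJECT_ID" \
  --display-name="GitHub DB backup"

# Create a JSON key for it; treat this file like a password.
gcloud iam service-accounts keys create key.json \
  --iam-account="$SA_EMAIL"

# Store the key and the project ID as repository secrets
# (gh reads the secret value from stdin when --body is not given).
gh secret set GOOGLE_APPLICATION_CREDENTIALS_JSON < key.json
gh secret set GCP_PROJECT_ID --body "$PROJECT_ID"

# Don't leave the key lying around on disk.
rm key.json
```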

Make sure to assign it the Storage Object Creator role!
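One way to grant that role is on the backup bucket only, rather than project-wide. Below is a sketch with a placeholder bucket name and service account email. Storage Object Creator can create objects but cannot delete or overwrite them. That limitation is fine here, because every backup gets a new, timestamped file name.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Placeholders (hypothetical): your bucket and the service account created above.
BUCKET="my-db-backups"
SA_EMAIL="github-db-backup@my-project.iam.gserviceaccount.com"

# Allow the service account to create objects in this one bucket.
gcloud storage buckets add-iam-policy-binding "gs://$BUCKET" \
  --member="serviceAccount:$SA_EMAIL" \
  --role="roles/storage.objectCreator"
```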

My GitHub Actions workflow looks like this:

```yaml
name: Backup Database to GCS

on:
  schedule:
    - cron: '0 3 * * *'   # every day at 03:00 UTC
  workflow_dispatch:        # allows manual runs

jobs:
  backup:
    runs-on: ubuntu-latest
    env:
      NODE_ENV: production
      GCP_PROJECT_ID: ${{ secrets.GCP_PROJECT_ID }}

    steps:
      - uses: actions/checkout@v4

      - name: Auth gcloud SDK
        uses: google-github-actions/auth@v2
        with:
          # Pass the key straight from secrets instead of exposing it to every step via env.
          credentials_json: ${{ secrets.GOOGLE_APPLICATION_CREDENTIALS_JSON }}

      - name: Install gcloud SDK
        uses: google-github-actions/setup-gcloud@v2
        with:
          project_id: ${{ env.GCP_PROJECT_ID }}

      - name: Read secrets into environment
        run: |
          POSTGRES_CONN="$(gcloud secrets versions access latest --secret=POSTGRES_CONN)"
          GCS_BUCKET="$(gcloud secrets versions access latest --secret=GCS_BUCKET)"
          # Mask the values so they never show up in the logs.
          echo "::add-mask::$POSTGRES_CONN"
          echo "::add-mask::$GCS_BUCKET"
          # Make them available as environment variables in the following steps.
          echo "POSTGRES_CONN=$POSTGRES_CONN" >> "$GITHUB_ENV"
          echo "GCS_BUCKET=$GCS_BUCKET" >> "$GITHUB_ENV"

      - name: Install PostgreSQL 17 client tools
        run: |
          # Add the PostgreSQL apt repository with a signed-by keyring (apt-key is deprecated).
          sudo install -d /usr/share/postgresql-common/pgdg
          sudo curl -fsSL -o /usr/share/postgresql-common/pgdg/apt.postgresql.org.asc \
            https://www.postgresql.org/media/keys/ACCC4CF8.asc
          echo "deb [signed-by=/usr/share/postgresql-common/pgdg/apt.postgresql.org.asc] https://apt.postgresql.org/pub/repos/apt $(lsb_release -cs)-pgdg main" \
            | sudo tee /etc/apt/sources.list.d/pgdg.list > /dev/null
          sudo apt-get update
          sudo apt-get install -y postgresql-client-17

      - name: Backup Postgresql
        run: |
          TIMESTAMP=$(date -u +"%Y-%m-%d_%H-%M-%S")
          BACKUP_FILE="db_backup_$TIMESTAMP.sql"
          # Use the shell variable exported via GITHUB_ENV instead of expression interpolation.
          pg_dump "$POSTGRES_CONN" > "$BACKUP_FILE"
          echo "Backup file created: $BACKUP_FILE"
          gcloud storage cp "$BACKUP_FILE" "gs://$GCS_BUCKET/"
```

This workflow runs every day at 3 AM UTC, and you can also start it manually.
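Because the workflow has a `workflow_dispatch` trigger, you can also start it from the terminal with the GitHub CLI. This is handy for testing the setup before the first scheduled run. Below is a sketch that assumes `gh` is authenticated and is run inside the repository. The short `sleep` is a rough guess to give GitHub time to register the new run before it is looked up.

```shell
#!/usr/bin/env bash
set -euo pipefail

WORKFLOW="Backup Database to GCS"   # the workflow's `name:` field

# Trigger the workflow_dispatch event on the default branch.
gh workflow run "$WORKFLOW"

# Give GitHub a moment to register the run, then look up its ID.
sleep 5
run_id="$(gh run list --workflow "$WORKFLOW" --limit 1 --json databaseId --jq '.[0].databaseId')"

# Follow the run; --exit-status makes this command fail if the backup job fails.
gh run watch "$run_id" --exit-status
```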

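The workflow first authenticates with the service account key. It then reads its configuration from Google Secret Manager, masks the values and passes them to the following steps through `$GITHUB_ENV`. Next, it installs the PostgreSQL 17 client tools. `pg_dump` refuses to dump a server whose major version is newer than its own, so the client must be at least as new as your database server. The last step exports the database with `pg_dump` and copies the file to Google Cloud Storage.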
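The workflow expects two secrets in Secret Manager, `POSTGRES_CONN` and `GCS_BUCKET`. Below is a sketch for creating them from the CLI. The connection string and bucket name are placeholders. `GCS_BUCKET` holds only the bucket name without `gs://`, because the workflow adds that prefix itself.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Make sure the Secret Manager API is enabled for the project.
gcloud services enable secretmanager.googleapis.com

# Placeholder values (hypothetical): use your own connection string and bucket.
POSTGRES_CONN='postgresql://backup_user:change-me@db.example.com:5432/app?sslmode=require'
GCS_BUCKET='my-db-backups'

# printf '%s' avoids storing a trailing newline in the secret;
# --data-file=- reads the value from stdin instead of a file on disk.
printf '%s' "$POSTGRES_CONN" | gcloud secrets create POSTGRES_CONN \
  --replication-policy=automatic --data-file=-
printf '%s' "$GCS_BUCKET" | gcloud secrets create GCS_BUCKET \
  --replication-policy=automatic --data-file=-
```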

For this exact example, your GitHub service account needs the Secret Manager Secret Accessor and Storage Object Creator roles.
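The accessor role can be granted on just the two secrets the workflow reads, so the account can't read your other secrets. Below is a sketch with a placeholder service account email.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Placeholder (hypothetical): the service account used by GitHub Actions.
SA_EMAIL="github-db-backup@my-project.iam.gserviceaccount.com"

# Grant read access only to the secrets the workflow needs.
for SECRET in POSTGRES_CONN GCS_BUCKET; do
  gcloud secrets add-iam-policy-binding "$SECRET" \
    --member="serviceAccount:$SA_EMAIL" \
    --role="roles/secretmanager.secretAccessor"
done
```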

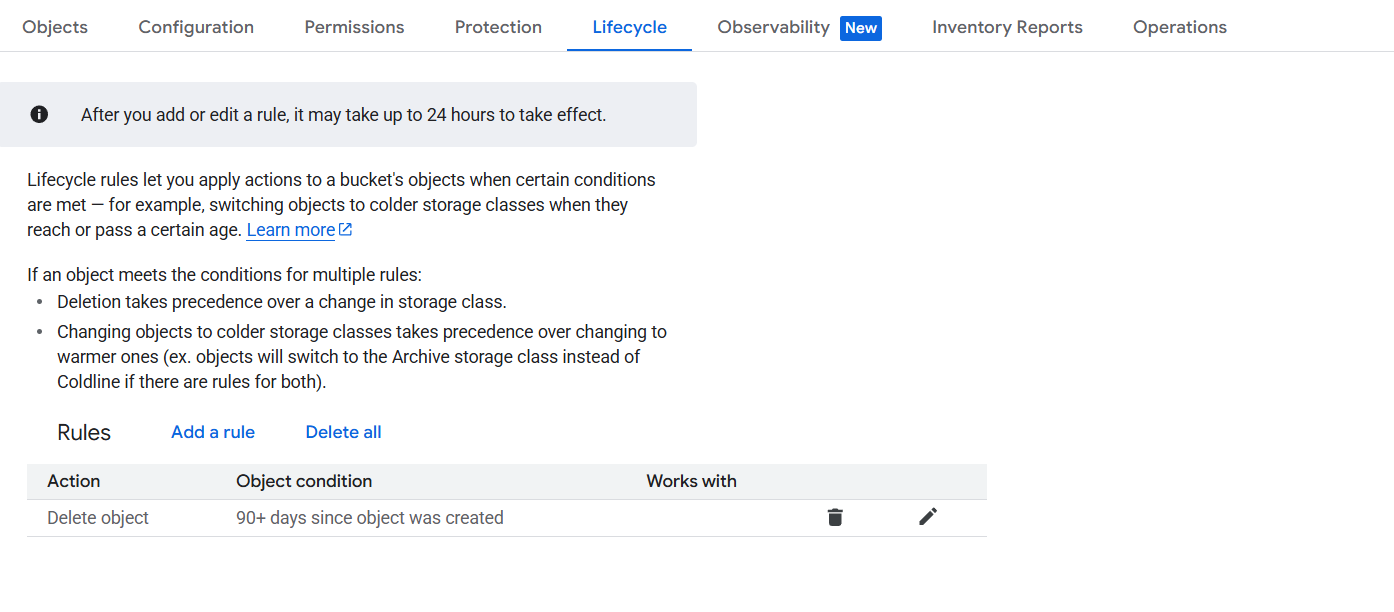

Oh, one more thing... your backups will pile up unless you set up a cleanup policy.

In the Google Cloud console, go to Cloud Storage, select your bucket, open the Lifecycle tab and add a rule that deletes objects, for example once they are older than a given number of days.
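You can also set the same kind of rule from the CLI. Below is a sketch with a placeholder bucket name and an assumed 30-day retention, so adjust both to your needs. The `matchesPrefix` condition restricts deletion to files whose names start with `db_backup_`, which is how the workflow names its dumps. Anything else in the bucket is left alone.

```shell
#!/usr/bin/env bash
set -euo pipefail

BUCKET="my-db-backups"   # placeholder (hypothetical)
RETENTION_DAYS=30        # assumption: keep about a month of daily backups

# Lifecycle rule: delete backup objects older than RETENTION_DAYS days.
cat > lifecycle.json <<EOF
{
  "lifecycle": {
    "rule": [
      {
        "action": { "type": "Delete" },
        "condition": { "age": ${RETENTION_DAYS}, "matchesPrefix": ["db_backup_"] }
      }
    ]
  }
}
EOF

gcloud storage buckets update "gs://$BUCKET" --lifecycle-file=lifecycle.json
```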

And finally, test that what's in your backups is actually a valid database export.
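Here is a sketch of two levels of checking: a quick check for truncated files, then an actual restore. It makes three assumptions that aren't from the original setup. The dumps are plain SQL (the default `pg_dump` output used by the workflow). `psql` and `gcloud` are installed where you run it. The scratch database URL you pass in (hypothetical) points at an empty, disposable database. The marker check only catches cut-off files. Only a real restore proves the dump is usable.

```shell
#!/usr/bin/env bash
# Helpers for checking backups produced by the workflow.
# Assumption: backups are plain-SQL pg_dump files named db_backup_<timestamp>.sql.

# Print the gs:// URL of the newest backup in a bucket. The timestamp format
# (YYYY-MM-DD_HH-MM-SS) sorts lexically in chronological order, so plain sort works.
latest_backup_url() {
  local bucket="$1"
  gcloud storage ls "gs://${bucket}/db_backup_*.sql" | sort | tail -n 1
}

# Cheap truncation check: a complete plain-format dump contains pg_dump's
# "-- PostgreSQL database dump complete" comment; an empty or cut-off file does not.
dump_looks_complete() {
  local file="$1"
  [ -s "$file" ] && grep -q -- '-- PostgreSQL database dump complete' "$file"
}

# Real check: replay the dump into a disposable database. ON_ERROR_STOP=1 makes
# psql exit non-zero on the first failing statement instead of carrying on.
restore_into_scratch_db() {
  local file="$1" scratch_db_url="$2"
  psql --dbname="$scratch_db_url" --quiet --set=ON_ERROR_STOP=1 --file="$file"
}

# Example usage (bucket name and RESTORE_DB_URL are hypothetical placeholders):
#   url="$(latest_backup_url my-db-backups)"
#   gcloud storage cp "$url" latest.sql
#   dump_looks_complete latest.sql && restore_into_scratch_db latest.sql "$RESTORE_DB_URL"
```

The scratch server should have the same roles as production. Otherwise the ownership statements in the dump will fail and stop the restore.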

YouTube: https://youtu.be/OOPhlNm-PKM